Trained deep neural network to classify ASL letters

Summary

I designed a convolutional neural network based on the AlexNet architecture and trained it to classify images of American Sign Language (ASL) letters. The training data was a Kaggle dataset of 87,000 images of ASL letters being signed: 29 classes, 3,000 images per class, each image 200x200 pixels. After loading, examining, and pre-processing the data, I trained the network on 70% of the dataset, continually checked its performance against a validation set of 15% of the dataset to make sure the network maintained high accuracy without overfitting to the training data, and finally used a test set of the remaining 15% to provide an unbiased evaluation of the final model fit.
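The 70/15/15 split described above can be sketched in a few lines of Python. This is only an illustration, not the code from the notebook: the function name `split_indices`, its parameters, and the fixed seed are all hypothetical, and it does a plain shuffled split rather than whatever splitting utility the notebook actually used.

```python
import random


def split_indices(n_items, train_pct=70, val_pct=15, seed=0):
    """Shuffle the indices 0..n_items-1 and cut them into train/val/test lists.

    Percentages are integers so the cut points are computed without
    floating-point rounding. Whatever is left after train and val goes to test.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable

    n_train = n_items * train_pct // 100
    n_val = n_items * val_pct // 100

    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


# 87,000 images, as in the dataset described above
train_idx, val_idx, test_idx = split_indices(87_000)
print(len(train_idx), len(val_idx), len(test_idx))
```

With 87,000 images this gives 60,900 training, 13,050 validation, and 13,050 test examples. A plain shuffle like this does not guarantee that each of the 29 classes is represented in exactly the same proportion in every subset; a stratified split would be needed for that.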

I took this project a step further because I wanted to learn how real-world AI products are built, i.e. how a trained model is used to classify new user-generated data. To do this, I created a web app where the user could sign a specific ASL letter and then press the Predict button. The Predict button would send that image to the server, which used the pre-trained model to classify the letter the user was signing, and the result was then displayed on the web page.
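The last step on the server, turning the model's output into a letter to display, might look roughly like the sketch below. It is an illustration under assumptions, not the app's actual code: `CLASS_NAMES` and `decode_prediction` are hypothetical names, the model is assumed to return one probability per class, and the label order (A to Z followed by three non-letter classes) is assumed; the real order depends on how the labels were encoded during training.

```python
import string

# ASSUMPTION: 26 letters plus three extra classes, in this order.
# The real order must match the label encoding used when training the model.
CLASS_NAMES = list(string.ascii_uppercase) + ["del", "nothing", "space"]


def decode_prediction(probabilities, class_names=CLASS_NAMES):
    """Return (label, probability) for the highest-scoring class.

    `probabilities` is assumed to be a sequence with one score per class,
    e.g. the softmax output of the network for a single image.
    """
    if len(probabilities) != len(class_names):
        raise ValueError("expected one probability per class")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_names[best], probabilities[best]


# Made-up model output in which the third class has the highest score
example_output = [0.0] * 29
example_output[2] = 0.9
label, confidence = decode_prediction(example_output)
print(label, confidence)
```

Returning the probability alongside the label lets the web page show how confident the model was, or hide low-confidence guesses.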

The link to the web app is in the GitHub repository above.

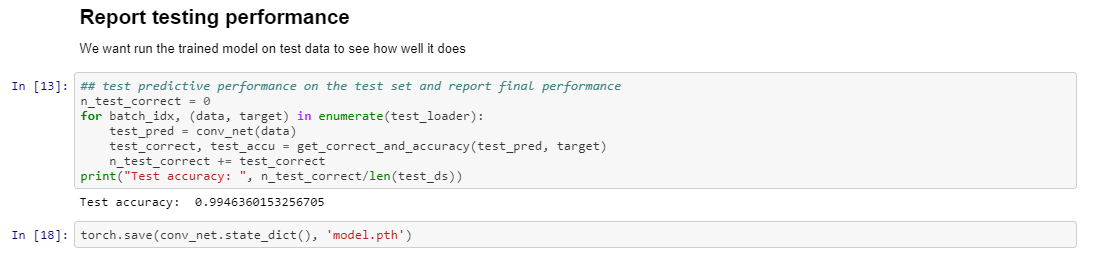

Loading Data into Jupyter Notebook

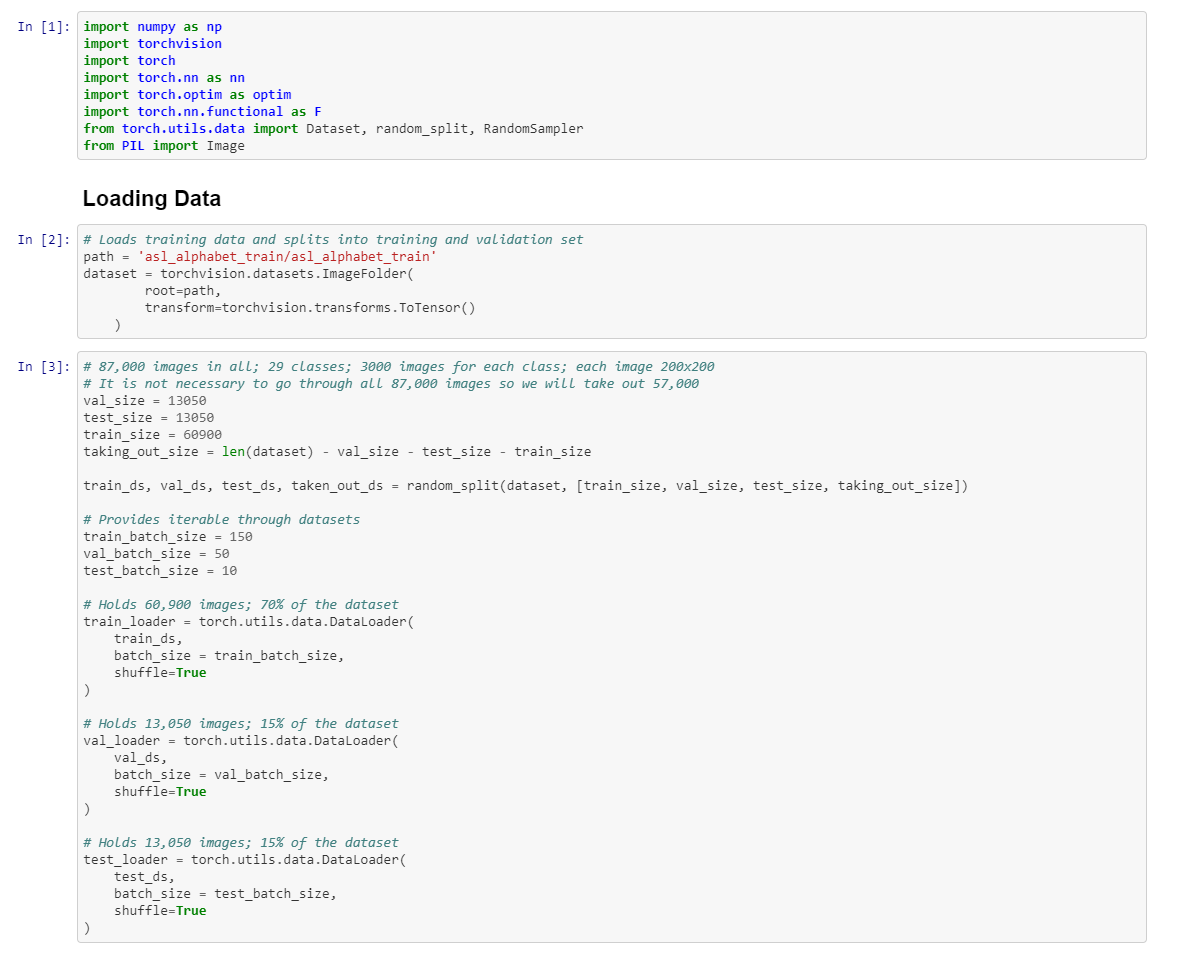

Examples of the ASL images that I trained the neural network on

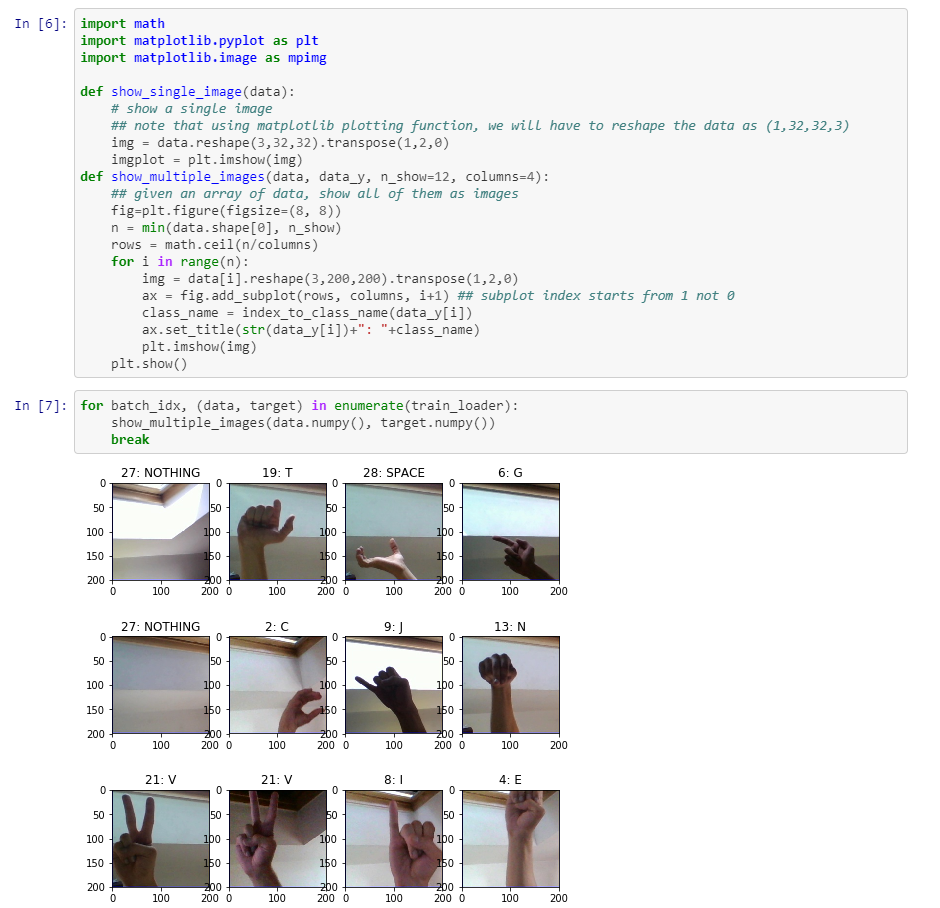

Design of Convolutional Network

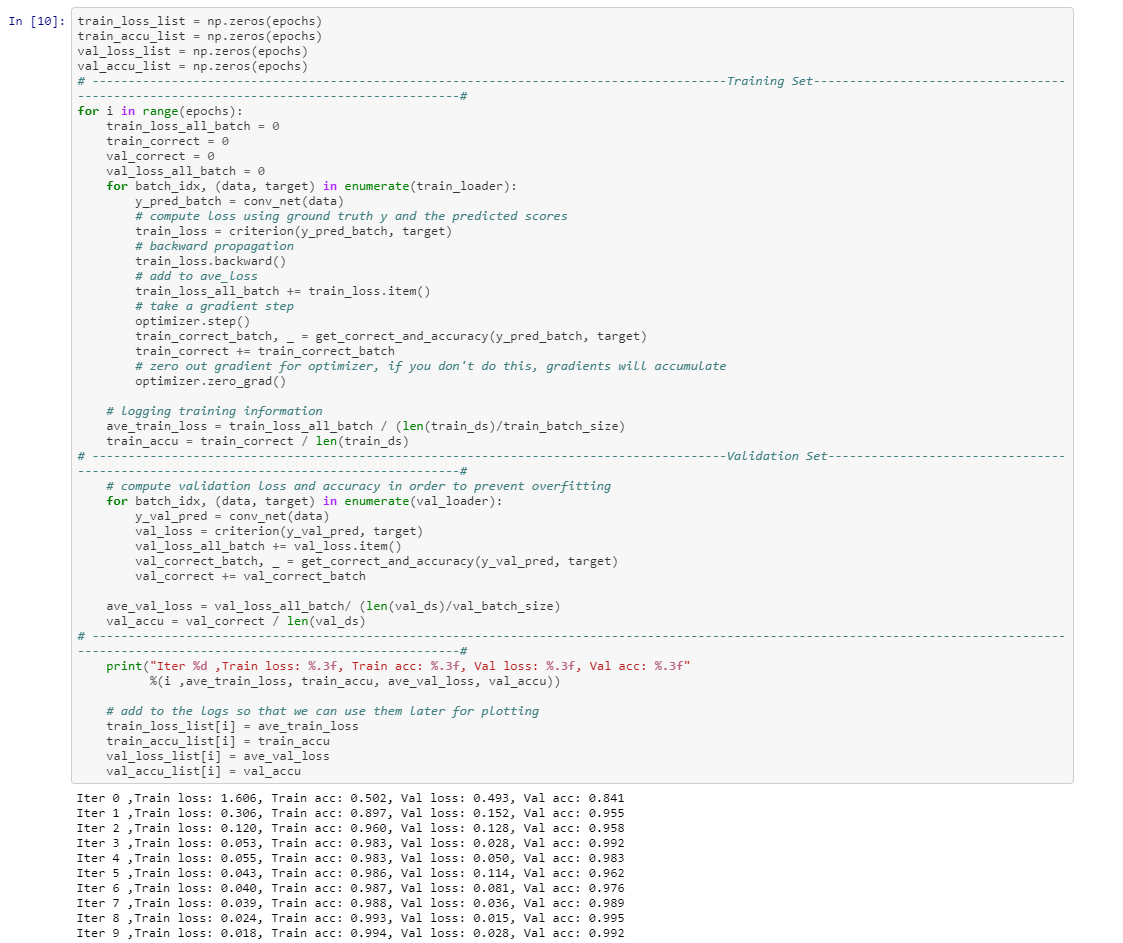

How I trained the Convolutional Network

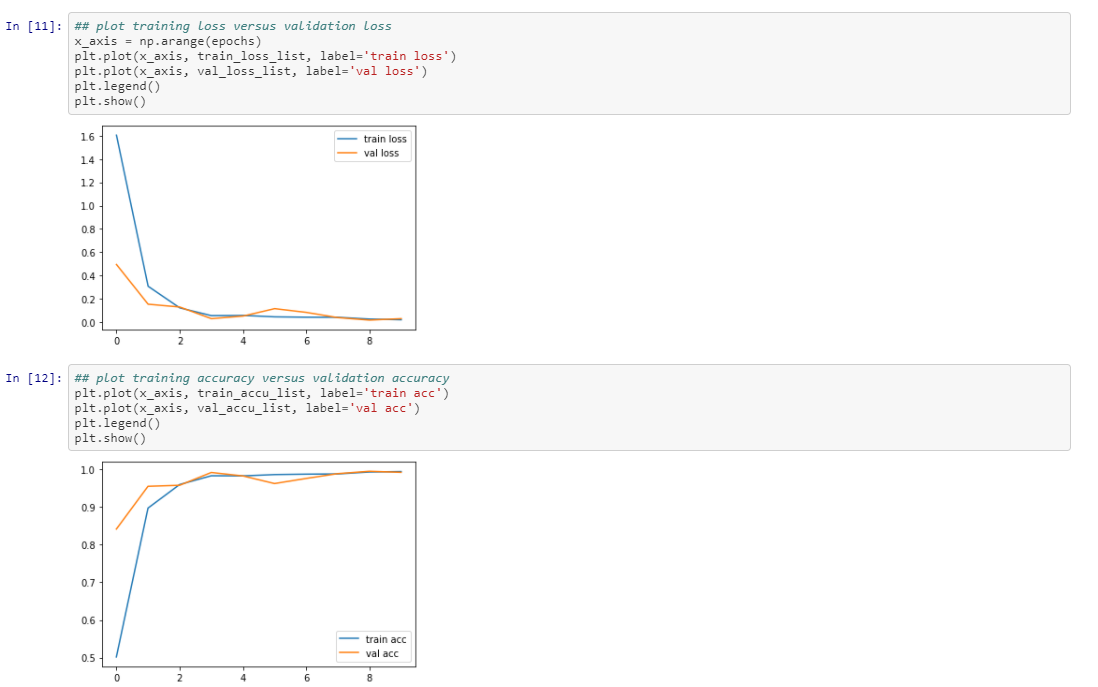

Graph of how training & validation loss fell and accuracy rose over the iterations

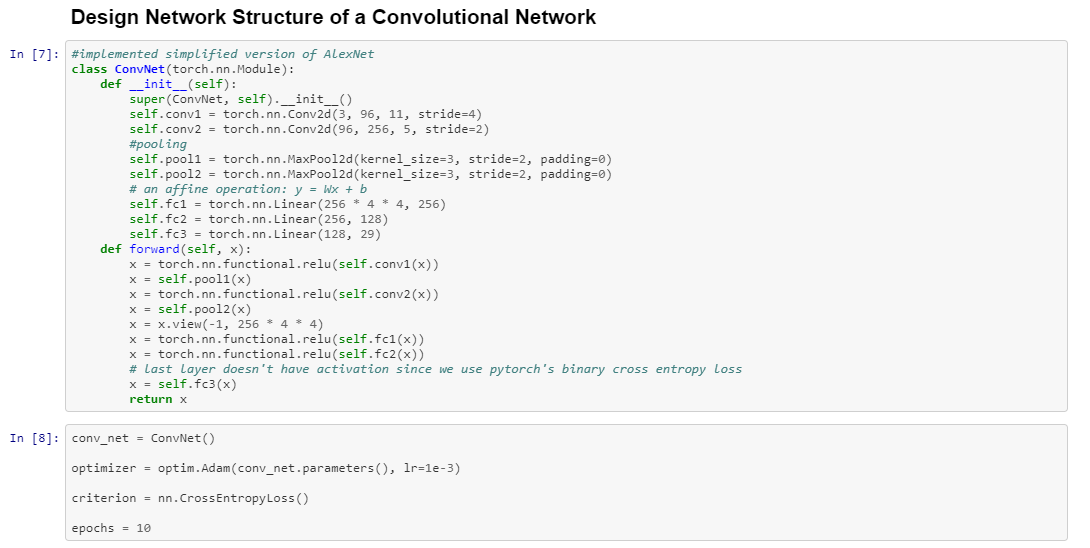

Accuracy of Neural Network on unbiased testing data and then saving the model